Canopy 1.0

The header-only random forests library


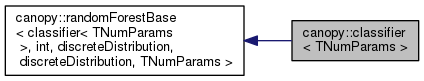

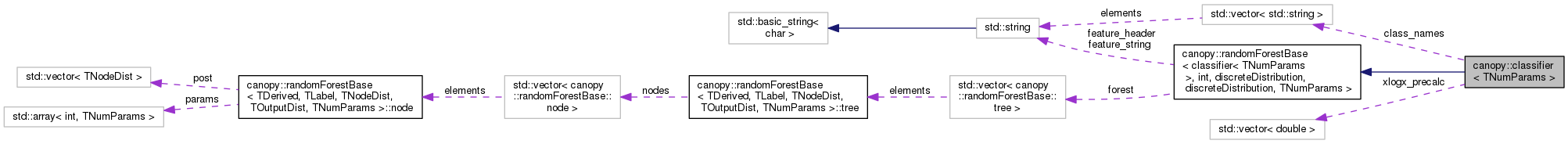

Implements a random forest classifier model to predict a discrete output label.

#include <classifier.hpp>

Public Member Functions

| classifier (const int num_classes, const int num_trees, const int num_levels, const double info_gain_tresh=C_DEFAULT_MIN_INFO_GAIN) |

Full constructor.

| classifier () |

Default constructor.

| int | getNumberClasses () const |

Get the number of classes in the discrete label space of the model.

| void | setClassNames (const std::vector< std::string > &new_class_names) |

Set the class name strings.

| void | getClassNames (std::vector< std::string > &end_class_names) const |

Get the class name strings.

| void | raiseNodeTemperature (const double T) |

Smooth the distributions in all of the leaf nodes using the softmax function.

Public Member Functions inherited from canopy::randomForestBase< classifier< TNumParams >, int, discreteDistribution, discreteDistribution, TNumParams >

| randomForestBase (const int num_trees, const int num_levels) |

Full constructor.

| bool | readFromFile (const std::string filename, const int trees_used=-1, const int max_depth_used=-1) |

Read a pre-trained model in from a file.

| bool | writeToFile (const std::string filename) const |

Write a trained model to a .tr file to be stored and re-used.

| bool | isValid () const |

Check whether a forest model is valid.

| void | setFeatureDefinitionString (const std::string &header_str, const std::string &feat_str) |

Store arbitrary strings that define parameters of the feature extraction process.

| void | getFeatureDefinitionString (std::string &feat_str) const |

Retrieve a stored feature string.

| void | train (const TIdIterator first_id, const TIdIterator last_id, const TLabelIterator first_label, TFeatureFunctor &&feature_functor, TParameterFunctor &&parameter_functor, const unsigned num_param_combos_to_test, const bool bagging=true, const float bag_proportion=C_DEFAULT_BAGGING_PROPORTION, const bool fit_split_nodes=true, const unsigned min_training_data=C_DEFAULT_MIN_TRAINING_DATA) |

Train the random forest model on training data.

| void | predictDistGroupwise (TIdIterator first_id, const TIdIterator last_id, TOutputIterator out_it, TFeatureFunctor &&feature_functor) const |

Predict the output distribution for a number of IDs.

| void | predictDistSingle (TIdIterator first_id, const TIdIterator last_id, TOutputIterator out_it, TFeatureFunctor &&feature_functor) const |

Predict the output distribution for a number of IDs.

| void | probabilityGroupwise (TIdIterator first_id, const TIdIterator last_id, TLabelIterator label_it, TOutputIterator out_it, const bool single_label, TFeatureFunctor &&feature_functor) const |

Evaluate the probability of a certain value of the label for a set of data points.

| void | probabilitySingle (TIdIterator first_id, const TIdIterator last_id, TLabelIterator label_it, TOutputIterator out_it, const bool single_label, TFeatureFunctor &&feature_functor) const |

Evaluate the probability of a certain value of the label for a set of data points.

| void | probabilityGroupwiseBase (TIdIterator first_id, const TIdIterator last_id, TLabelIterator label_it, TOutputIterator out_it, const bool single_label, TBinaryFunction &&binary_function, TFeatureFunctor &&feature_functor, TPDFFunctor &&pdf_functor) const |

A generalised version of the probabilityGroupwise() function that enables the creation of more general functions.

| void | probabilitySingleBase (TIdIterator first_id, const TIdIterator last_id, TLabelIterator label_it, TOutputIterator out_it, const bool single_label, TBinaryFunction &&binary_function, TFeatureFunctor &&feature_functor, TPDFFunctor &&pdf_functor) const |

A generalised version of the probabilitySingle() function that enables the creation of more general functions.

Protected Types

| typedef randomForestBase< classifier< TNumParams >, int, discreteDistribution, discreteDistribution, TNumParams >::scoreInternalIndexStruct | scoreInternalIndexStruct |

Forward the definition of the type declared in the randomForestBase class.

Protected Member Functions

| void | initialiseNodeDist (const int t, const int n) |

Initialise a discreteDistribution as a node distribution for training.

| template<class TLabelIterator > |

| void | bestSplit (const std::vector< scoreInternalIndexStruct > &data_structs, const TLabelIterator first_label, const int, const int, const float initial_impurity, float &info_gain, float &thresh) const |

Find the best way to split training data using the scores of a certain feature.

| void | printHeaderDescription (std::ofstream &stream) const |

Print, to a stream, a string that allows a human to interpret the header information.

| void | printHeaderData (std::ofstream &stream) const |

Print the header information specific to the classifier model to a stream.

| void | readHeader (std::ifstream &stream) |

Read the header information specific to the classifier model from a stream.

| float | minInfoGain (const int, const int) const |

Get the information gain threshold for a given node.

| template<class TLabelIterator > |

| float | singleNodeImpurity (const TLabelIterator first_label, const std::vector< int > &nodebag, const int, const int) const |

Calculate the impurity of the label set in a single node.

| template<class TLabelIterator , class TIdIterator > |

| void | trainingPrecalculations (const TLabelIterator first_label, const TLabelIterator last_label, const TIdIterator) |

Preliminary calculations to perform before training begins.

| void | cleanupPrecalculations () |

Clean-up of data to perform after training ends.

Protected Member Functions inherited from canopy::randomForestBase< classifier< TNumParams >, int, discreteDistribution, discreteDistribution, TNumParams >

| void | findLeavesGroupwise (TIdIterator first_id, const TIdIterator last_id, const int treenum, std::vector< const discreteDistribution * > &leaves, TFeatureFunctor &&feature_functor) const |

Function to query a single tree model with a set of data points and store a pointer to the leaf distribution that each reaches.

| const discreteDistribution * | findLeafSingle (const TId id, const int treenum, TFeatureFunctor &&feature_functor) const |

Function to query a single tree model with a single data point and return a pointer to the leaf distribution that it reaches.

Protected Attributes

| int | n_classes |

The number of classes in the discrete label space.

| std::vector< std::string > | class_names |

The names of the classes.

| std::vector< double > | xlogx_precalc |

Used for storing temporary precalculations of x*log(x) values during training.

| double | min_info_gain |

If, during training, the best information gain at a node falls below this threshold, the node is declared a leaf node.

Protected Attributes inherited from canopy::randomForestBase< classifier< TNumParams >, int, discreteDistribution, discreteDistribution, TNumParams >

| int | n_trees |

The number of trees in the forest.

| int | n_levels |

The maximum number of levels in each tree.

| int | n_nodes |

The number of nodes in each tree.

| bool | valid |

Whether the forest model is currently valid and usable for predictions (true = valid).

| bool | fit_split_nodes |

Whether a node distribution is fitted to all nodes (true) or just the leaf nodes (false).

| std::vector< tree > | forest |

Vector of tree models.

| std::string | feature_header |

String describing the content of the feature string.

| std::string | feature_string |

Arbitrary string describing the feature extraction process.

| std::default_random_engine | rand_engine |

Random engine for generating random numbers during training; may also be used by derived classes.

| std::uniform_int_distribution< int > | uni_dist |

For generating random integers during training; may also be used by derived classes.

Static Protected Attributes

| static constexpr double | C_DEFAULT_MIN_INFO_GAIN = 0.05 |

Default value for the information gain threshold.

Static Protected Attributes inherited from canopy::randomForestBase< classifier< TNumParams >, int, discreteDistribution, discreteDistribution, TNumParams >

| static constexpr int | C_DEFAULT_MIN_TRAINING_DATA |

Default value for the minimum number of training data points in a node before a leaf is declared.

| static constexpr float | C_DEFAULT_BAGGING_PROPORTION |

Default value for the proportion of the training set used to train each tree.

Additional Inherited Members

Static Protected Member Functions inherited from canopy::randomForestBase< classifier< TNumParams >, int, discreteDistribution, discreteDistribution, TNumParams >

| static double | fastDiscreteEntropy (const std::vector< int > &internal_index, const int n_labels, const TLabelIterator first_label, const std::vector< double > &xlogx_precalc) |

Calculates the entropy of the discrete labels of a set of data points using an efficient method.

| static int | fastDiscreteEntropySplit (const std::vector< scoreInternalIndexStruct > &data_structs, const int n_labels, const TLabelIterator first_label, const std::vector< double > &xlogx_precalc, double &best_split_impurity, float &thresh) |

Find the split in a set of training data that results in the best information gain for discrete labels.

| static std::vector< double > | preCalculateXlogX (const int N) |

Calculate an array of x*log(x) for integer x.

Implements a random forest classifier model to predict a discrete output label.

This class uses the discreteDistribution as both the output distribution and the node distribution, and int as the type of label to predict.

| TNumParams | The number of parameters used by the features callback functor. |

| canopy::classifier< TNumParams >::classifier ( const int num_classes, const int num_trees, const int num_levels, const double info_gain_tresh = C_DEFAULT_MIN_INFO_GAIN ) |

Full constructor.

Creates a full forest with a specified number of trees and levels, ready to be trained.

| num_classes | Number of discrete classes in the label space. The labels are assumed to run from 0 to num_classes-1 inclusive. |

| num_trees | The number of decision trees in the forest |

| num_levels | The maximum depth of any node in the trees |

| info_gain_tresh | The information gain threshold to use when training the model. Nodes where the best split is found to result in an information gain value less than this threshold are made into leaf nodes. Default: C_DEFAULT_MIN_INFO_GAIN |

| canopy::classifier< TNumParams >::classifier ( ) |

Default constructor.

Note that an object initialised in this way should not be trained, but it may be used to read in a pre-trained model using readFromFile().

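| template<class TLabelIterator > void canopy::classifier< TNumParams >::bestSplit ( const std::vector< scoreInternalIndexStruct > & data_structs, const TLabelIterator first_label, const int, const int, const float initial_impurity, float & info_gain, float & thresh ) const | protected |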

Find the best way to split training data using the scores of a certain feature.

This method takes a set of training data points and their scores resulting from some feature, and calculates the best score threshold that may be used to split the data into two partitions. The best split is the one that results in the greatest information gain in the child nodes, which in this case is based on the discrete entropy.

This method is called automatically by the base class.

| TLabelIterator | Type of the iterator used to access the discrete labels. Must be a random access iterator that dereferences to an integral data type. |

| data_structs | A vector in which each element is a structure containing the internal id (.id) and score (.score) for the current feature of the training data points. The vector is assumed to be sorted according to the score field in ascending order. |

| first_label | Iterator to the labels for which the entropy is to be calculated. The labels should be located at the offsets from this iterator given by the IDs of the elements of the data_structs vector, i.e. first_label[data_structs[0].id], first_label[data_structs[1].id], and so on. |

| - | The third parameter is unused but required for compatibility with randomForestBase |

| - | The fourth parameter is unused but required for compatibility with randomForestBase |

| initial_impurity | The initial impurity of the node before the split. This must be calculated with singleNodeImpurity() and passed in. |

| info_gain | The information gain associated with the best split (i.e. the maximum achievable information gain with this feature) is returned by reference in this parameter. |

| thresh | The threshold value of the feature score corresponding to the best split is returned by reference in this parameter. |
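The threshold sweep that bestSplit() performs can be illustrated with a standalone sketch. This is not Canopy's implementation: `ScoreIdSketch`, `entropyFromCounts` and `bestSplitSketch` are hypothetical names, the entropy uses the natural logarithm, and the threshold is assumed to sit at the midpoint between two neighbouring distinct scores (points scoring below it going to the left child). These conventions are assumptions and the library's own choices may differ.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical stand-in for scoreInternalIndexStruct: only the two fields the
// documentation mentions (.id and .score) are modelled here.
struct ScoreIdSketch {
    int id;       // internal index used to look up the label
    float score;  // feature score for this data point
};

// Discrete entropy (natural log) of a set of class counts summing to n.
double entropyFromCounts(const std::vector<int>& counts, int n) {
    if (n == 0) return 0.0;
    double h = 0.0;
    for (int c : counts) {
        if (c > 0) {
            const double p = static_cast<double>(c) / n;
            h -= p * std::log(p);
        }
    }
    return h;
}

// Sweep a threshold through data sorted by ascending score. Returns the best
// information gain and writes the matching threshold to thresh. Returns 0 if
// no split improves on initial_impurity.
template <class TLabelIterator>
float bestSplitSketch(const std::vector<ScoreIdSketch>& data,
                      TLabelIterator first_label, int n_classes,
                      float initial_impurity, float& thresh) {
    assert(n_classes > 0);
    const int n = static_cast<int>(data.size());
    std::vector<int> left(n_classes, 0), right(n_classes, 0);
    for (const ScoreIdSketch& d : data) ++right[first_label[d.id]];

    float best_gain = 0.0f;
    thresh = data.empty() ? 0.0f : data.front().score;
    for (int i = 0; i + 1 < n; ++i) {
        // Move point i from the right partition into the left partition.
        const int label = first_label[data[i].id];
        ++left[label];
        --right[label];
        // A threshold can only separate points whose scores differ.
        if (data[i].score == data[i + 1].score) continue;
        const int n_left = i + 1;
        const int n_right = n - n_left;
        // Size-weighted impurity of the two child partitions.
        const double child_impurity =
            (n_left * entropyFromCounts(left, n_left) +
             n_right * entropyFromCounts(right, n_right)) / n;
        const float gain = initial_impurity - static_cast<float>(child_impurity);
        if (gain > best_gain) {
            best_gain = gain;
            thresh = 0.5f * (data[i].score + data[i + 1].score);
        }
    }
    return best_gain;
}
```

Keeping running class counts for both partitions means each candidate threshold costs time proportional to the number of classes rather than a re-scan of the data, which is why the input must already be sorted by score. The returned gain is what would then be compared against the node's information gain threshold to decide whether the node becomes a leaf.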

| void canopy::classifier< TNumParams >::cleanupPrecalculations ( ) | protected |

Clean-up of data to perform after training ends.

In this case, this clears the pre-calculated array created by trainingPrecalculations().

This method is called automatically by the base class.

| void canopy::classifier< TNumParams >::getClassNames ( std::vector< std::string > & class_names ) const |

Get the class name strings.

Retrieve a previously stored set of class names.

| class_names | The class names are returned by reference in this vector. If none have been set, an empty vector is returned. |

| int canopy::classifier< TNumParams >::getNumberClasses ( ) const |

Get the number of classes in the discrete label space of the model.

| void canopy::classifier< TNumParams >::initialiseNodeDist ( const int t, const int n ) | protected |

Initialise a discreteDistribution as a node distribution for training.

This method is called automatically by the base class.

| t | Index of the tree in which the distribution is to be initialised. |

| n | Index of the node to be initialised within its tree. |

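| float canopy::classifier< TNumParams >::minInfoGain ( const int, const int ) const | protected |

Get the information gain threshold for a given node.

In this case, this is a fixed value (min_info_gain) shared by all nodes. This method is called automatically by the base class.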

| - | The first parameter is unused but required for compatibility with randomForestBase |

| - | The second parameter is unused but required for compatibility with randomForestBase |

| void canopy::classifier< TNumParams >::printHeaderData ( std::ofstream & stream ) const | protected |

Print the header information specific to the classifier model to a stream.

This prints out the number of classes and the class names to the stream.

This method is called automatically by the base class.

| stream | The stream to which the header is printed. |

| void canopy::classifier< TNumParams >::printHeaderDescription ( std::ofstream & stream ) const | protected |

Print, to a stream, a string that allows a human to interpret the header information.

This method is called automatically by the base class.

| stream | The stream to which the header description is printed. |

| void canopy::classifier< TNumParams >::raiseNodeTemperature ( const double T ) |

Smooth the distributions in all of the leaf nodes using the softmax function.

This alters the probability distributions by replacing the probability of class \( i \) according to

\[ p_i \leftarrow \frac{e^{p_i / T}}{\sum_{j=1}^{N} e^{p_j / T}} \]

where \( N \) is the number of classes and \( T \) is a temperature parameter. This has the effect of regularising the distributions by reducing their certainty.

| T | The temperature parameter. The higher the temperature, the more the certainty is reduced. T must be strictly positive; otherwise, this function has no effect. |
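For illustration, the softmax update can be written as a standalone function acting on the probabilities of a single leaf. This is a sketch, not the library's code: `raiseTemperatureSketch` is a hypothetical name, and the leaf distribution is assumed to be available as a `std::vector<double>`. Canopy itself applies the update to every leaf node.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

// Apply the tempered softmax p_i <- exp(p_i/T) / sum_j exp(p_j/T) to one
// leaf's class probabilities. As documented, a non-positive T has no effect.
void raiseTemperatureSketch(std::vector<double>& p, double T) {
    if (T <= 0.0 || p.empty()) return;
    // Subtracting the maximum before exponentiating avoids overflow for small T;
    // the common factor exp(-max/T) cancels in the normalisation below.
    const double max_p = *std::max_element(p.begin(), p.end());
    double sum = 0.0;
    for (double& v : p) {
        v = std::exp((v - max_p) / T);
        sum += v;
    }
    // Normalise so the probabilities sum to 1.
    for (double& v : p) v /= sum;
}
```

With T = 1, the distribution {0.9, 0.1} becomes roughly {0.69, 0.31}; as T grows, the result approaches a uniform distribution.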

| void canopy::classifier< TNumParams >::readHeader ( std::ifstream & stream ) | protected |

Read the header information specific to the classifier model from a stream.

This reads in the number of classes and the class names from the stream.

This method is called automatically by the base class.

| stream | The stream from which the header information is read. |

| void canopy::classifier< TNumParams >::setClassNames ( const std::vector< std::string > & new_class_names ) |

Set the class name strings.

These will be stored within the model (including when it is written to file) and may be retrieved later. However, they do not affect the operation of the model in any way and are entirely optional.

| new_class_names | Vector with each element containing the name of one class. |

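| template<class TLabelIterator > float canopy::classifier< TNumParams >::singleNodeImpurity ( const TLabelIterator first_label, const std::vector< int > & nodebag, const int, const int ) const | protected |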

Calculate the impurity of the label set in a single node.

This method takes the labels (discrete class labels) of a set of training data points and calculates the impurity of that set. In this case, this is based on the discrete entropy of the set.

This method is called automatically by the base class.

| TLabelIterator | Type of the iterator used to access the discrete labels. Must be a random access iterator that dereferences to an integral data type. |

| first_label | Iterator to the labels for which the entropy is to be calculated. The labels should be located at the offsets from this iterator given by the elements of the nodebag vector, i.e. first_label[nodebag[0]], first_label[nodebag[1]], and so on. |

| nodebag | Vector containing the internal training indices of the data points. These are the indices through which the labels may be accessed in first_label. |

| - | The third parameter is unused but required for compatibility with randomForestBase |

| - | The fourth parameter is unused but required for compatibility with randomForestBase |
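As an illustration of the nodebag indexing described above, the following standalone function computes a node's discrete entropy from class counts. It is a sketch under stated assumptions: `nodeEntropySketch` is a hypothetical name, the natural logarithm is used, and labels are assumed to run from 0 to n_classes-1 as stated for the constructor. Canopy's own implementation may instead rely on the pre-calculated x*log(x) values.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Discrete entropy (natural log) of the labels reached through nodebag, i.e.
// first_label[nodebag[0]], first_label[nodebag[1]], and so on.
template <class TLabelIterator>
double nodeEntropySketch(TLabelIterator first_label,
                         const std::vector<int>& nodebag, int n_classes) {
    if (nodebag.empty()) return 0.0;
    // Count how many data points in the node carry each class label.
    std::vector<int> counts(n_classes, 0);
    for (int idx : nodebag) {
        const int label = first_label[idx];
        assert(label >= 0 && label < n_classes);  // labels run 0..n_classes-1
        ++counts[label];
    }
    const double n = static_cast<double>(nodebag.size());
    double h = 0.0;
    for (int c : counts) {
        if (c > 0) h -= (c / n) * std::log(c / n);
    }
    return h;
}
```

A node whose data points all share one label has zero impurity, while a node split evenly over k classes has impurity log(k).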

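| template<class TLabelIterator , class TIdIterator > void canopy::classifier< TNumParams >::trainingPrecalculations ( const TLabelIterator first_label, const TLabelIterator last_label, const TIdIterator ) | protected |

Preliminary calculations to perform before training begins.

In this case, this pre-calculates an array of values of x*log(x) to speed up entropy calculations.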

This method is called automatically by the base class.

| TLabelIterator | Type of the iterator used to access the training labels. Must be a random access iterator that dereferences to an integral data type. |

| TIdIterator | Type of the iterator used to access the IDs of the training data. The IDs are unused but required for compatibility with randomForestBase. |

| first_label | Iterator to the first label in the training set |

| last_label | Iterator to the last label in the training set |

| - | The third parameter is unused but required for compatibility with randomForestBase |
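The pre-calculation can be sketched as follows. The sketch relies on the identity H = log(n) - (1/n) Σ_k c_k log(c_k) for class counts c_k summing to n, which turns every term of the entropy into a table lookup. `xlogxTable` and `entropyFromTable` are hypothetical names, and it is an assumption that Canopy's fastDiscreteEntropy() uses exactly this identity.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// table[x] = x * log(x) for x = 0..N, using the convention 0 * log(0) = 0.
std::vector<double> xlogxTable(int N) {
    std::vector<double> table(static_cast<std::size_t>(N) + 1, 0.0);
    for (int x = 1; x <= N; ++x) {
        table[x] = x * std::log(static_cast<double>(x));
    }
    return table;
}

// Entropy (natural log) of integer class counts via
// H = log(n) - (1/n) * sum_k c_k log(c_k) = (n log n - sum_k c_k log c_k) / n.
double entropyFromTable(const std::vector<int>& counts,
                        const std::vector<double>& table) {
    int n = 0;
    for (int c : counts) n += c;
    if (n == 0) return 0.0;
    assert(static_cast<std::size_t>(n) < table.size());  // table must cover n
    double sum_clogc = 0.0;
    for (int c : counts) sum_clogc += table[c];  // c <= n, so in range
    return (table[n] - sum_clogc) / n;
}
```

Because no class count in a node can exceed the number of training data points, a single table of that size, built once before training, would cover every lookup.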

1.8.11
